Deep Dive into Amazon's ena driver in the Linux Kernel

— Linux, Kernel, Driver, Network — 6 min read

This post looks at how the ENA (Elastic Network Adapter) driver works in Linux Kernel 5.5.0-rc7 and, in doing so, also discusses the inner workings of the Linux Kernel networking subsystem.

Background

Amazon announced the Elastic Network Adapter in mid-2016, aiming to provide networking features such as reduced latency, increased packet rate, and reduced variability. One of its selling points is that ENA allows a machine's network bandwidth to scale well with the number of vCPUs, through the Multi-Queue Device Interface and Receive-Side Steering, and reduces computation load through hardware-based checksum generation.

The driver of the Elastic Network Adapter has been included in the mainline Kernel since 4.9, and is required to enable Enhanced Networking on AWS.

Note: ENA's hardware interface actually looks quite similar to that of NVMe, with Admin Queue, paired Submission/Completion Queue, and Doorbell Registers.

Show me the Code

The mainlined source code for the ENA driver can be found inside drivers/net/ethernet/amazon/ena/, whereas the latest version of the driver can be found in amzn/amzn-drivers.

Again, this post focuses on version 5.5.0-rc7 of the Linux Kernel.

Note: Source code shown in this article has been truncated and re-ordered for demonstration purposes (mostly omitting error handling details), thus may not match the source code you see.

Module Initialization

The entry point to the ena module is ena_init, which is called when the module is loaded. It creates a workqueue named ena with create_singlethread_workqueue (now deprecated in favor of alloc_workqueue), and registers the ena driver, defined in ena_pci_driver, with the PCI Bus subsystem.

```c
static struct pci_driver ena_pci_driver = {
    .name            = DRV_MODULE_NAME,
    .id_table        = ena_pci_tbl,
    .probe           = ena_probe,
    .remove          = ena_remove,
    // Power management functionality omitted
    .sriov_configure = pci_sriov_configure_simple,
};
```

The two things that matter the most at this point are the id_table and the probe function. id_table is defined in ena_pci_id_tbl.h, and tells the PCI Bus Subsystem which PCI Device IDs this driver is interested in. These can be found in the documentation under "Supported PCI vendor ID/device IDs."

```c
// Vendor ID omitted
#define PCI_DEV_ID_ENA_PF       0x0ec2
#define PCI_DEV_ID_ENA_LLQ_PF   0x1ec2
#define PCI_DEV_ID_ENA_VF       0xec20
#define PCI_DEV_ID_ENA_LLQ_VF   0xec21
```

Device Setup

The probe pointer points to the ena_probe function, which is in charge of initializing the adapter. When the PCI Bus Subsystem encounters a PCI Device that matches one of the IDs of the ena driver, ena_probe is called.
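To make the matching step concrete, here is a minimal userspace sketch of what the bus core conceptually does with an ID table. It is not kernel code: the demo_* names are hypothetical, the struct is a simplified stand-in for pci_device_id, and the vendor ID 0x1d0f is an assumption on my part, since the listing omits it.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simplified stand-in for the kernel's struct pci_device_id. */
struct demo_pci_id {
    uint16_t vendor;
    uint16_t device;
};

/* Assumption: Amazon's PCI vendor ID (not shown in the article). */
#define DEMO_VENDOR_AMAZON 0x1d0f

/* Device IDs from ena_pci_id_tbl.h; a zeroed entry terminates the table. */
static const struct demo_pci_id demo_ena_tbl[] = {
    { DEMO_VENDOR_AMAZON, 0x0ec2 }, /* ENA PF */
    { DEMO_VENDOR_AMAZON, 0x1ec2 }, /* ENA LLQ PF */
    { DEMO_VENDOR_AMAZON, 0xec20 }, /* ENA VF */
    { DEMO_VENDOR_AMAZON, 0xec21 }, /* ENA LLQ VF */
    { 0, 0 }
};

/* Return the matching entry, or NULL if the driver does not claim the device. */
static const struct demo_pci_id *demo_match(const struct demo_pci_id *tbl,
                                            uint16_t vendor, uint16_t device)
{
    assert(tbl != NULL);
    for (; tbl->vendor != 0; tbl++) {
        if (tbl->vendor == vendor && tbl->device == device)
            return tbl;
    }
    return NULL;
}

/* Hypothetical probe callback: counts invocations and reports success. */
static int demo_probe_calls;

static int demo_probe(const struct demo_pci_id *id)
{
    (void)id;
    demo_probe_calls++;
    return 0;
}

/* Conceptually what happens for each device the bus discovers. */
static int demo_bus_add_device(uint16_t vendor, uint16_t device)
{
    const struct demo_pci_id *id = demo_match(demo_ena_tbl, vendor, device);

    if (!id)
        return -1; /* no driver bound */
    return demo_probe(id);
}
```

Calling demo_bus_add_device with a vendor/device pair from the table runs the probe callback; any other pair is left unbound.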

ena_probe is a complex function that spans more than 200 lines of code. We follow the ideal case (no errors) and break it up so it's easier to understand.

```c
static int ena_probe(struct pci_dev *pdev, const struct pci_device_id *ent)
{
    // Code omitted for brevity
    pci_enable_device_mem(pdev);
    pci_set_master(pdev);
    ena_dev = vzalloc(sizeof(*ena_dev));
    bars = pci_select_bars(pdev, IORESOURCE_MEM) & ENA_BAR_MASK;
    pci_request_selected_regions(pdev, bars, DRV_MODULE_NAME);
    ena_dev->reg_bar = devm_ioremap(&pdev->dev,
                                    pci_resource_start(pdev, ENA_REG_BAR),
                                    pci_resource_len(pdev, ENA_REG_BAR));

    ena_dev->dmadev = &pdev->dev;
    // ...
}
```

First and foremost, pci_enable_device_mem is called to enable the device; it wakes up the device and any upstream PCI bridges. This is followed by the other tasks a PCI device needs, most of which relate to the BARs (Base Address Registers), such as mapping a BAR to an address usable by the driver.

Then ena_device_init is called to do the actual device initialization, including resetting of the device, as well as initializing the Admin Queue and Asynchronous Event Notification Queue.

ena_netdev_ops is set as the netdev_ops on the netdev structure, which registers the following operations.

```c
static const struct net_device_ops ena_netdev_ops = {
    .ndo_open            = ena_open,
    .ndo_stop            = ena_close,
    .ndo_start_xmit      = ena_start_xmit,
    .ndo_select_queue    = ena_select_queue,
    .ndo_get_stats64     = ena_get_stats64,
    .ndo_tx_timeout      = ena_tx_timeout,
    .ndo_change_mtu      = ena_change_mtu,
    .ndo_set_mac_address = NULL,
    .ndo_validate_addr   = eth_validate_addr,
};
```

Note: The ENA driver doesn't provide a way to set the MAC address of the interface; perhaps this is for security reasons?

It also sets up a timer service that runs in the background for bookkeeping purposes.

```c
timer_setup(&adapter->timer_service, ena_timer_service, 0);
```

Interface Bring-up

When the associated network interface is activated, ena_open is called, which in turn calls ena_up.

ena_up sets up the transmission and reception driver queues with create_queues_with_size_backoff, installs ena_intr_msix_io as the interrupt handler for all the queues (each queue has different data associated with it), and enables the interrupts (with SMP affinity set) in ena_request_io_irq.

NAPI Setup

ena_intr_msix_io itself does little work. It merely calls napi_schedule_irqoff, which schedules ena_io_poll to be called during Soft IRQ processing. The NAPI setup occurs when ena_up calls ena_init_napi:

```c
static void ena_init_napi(struct ena_adapter *adapter)
{
    struct ena_napi *napi;
    int i;

    for (i = 0; i < adapter->num_io_queues; i++) {
        napi = &adapter->ena_napi[i];

        netif_napi_add(adapter->netdev,
                       &adapter->ena_napi[i].napi,
                       ena_io_poll,
                       ENA_NAPI_BUDGET);
        napi->rx_ring = &adapter->rx_ring[i];
        napi->tx_ring = &adapter->tx_ring[i];
        napi->qid = i;
    }
}
```

Data Path

The actual transfer of packet data to and from the system is performed by the adapter, through direct memory access (DMA) to main memory.

What the driver does is write and read packet descriptors that tell the adapter where to read or write the packet data.
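As a rough mental model, a descriptor ring can be sketched in plain C as below. This is a userspace illustration only: the descriptor layout, the ring size, and the demo_* names are hypothetical and much simpler than the real ENA descriptors, and a memcpy stands in for the adapter's DMA engine.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define DEMO_RING_SIZE 8u /* power of two so indices wrap with a mask */

/* Hypothetical descriptor: where the packet data lives and how long it is. */
struct demo_desc {
    uint64_t addr; /* stand-in for a DMA address */
    uint32_t len;
};

struct demo_ring {
    struct demo_desc desc[DEMO_RING_SIZE];
    uint32_t tail; /* next slot the driver writes */
    uint32_t head; /* next slot the device reads */
};

/* Driver side: publish one descriptor; returns -1 when the ring is full. */
static int demo_post(struct demo_ring *r, const void *buf, uint32_t len)
{
    if (r->tail - r->head == DEMO_RING_SIZE)
        return -1;

    struct demo_desc *d = &r->desc[r->tail & (DEMO_RING_SIZE - 1)];

    d->addr = (uint64_t)(uintptr_t)buf;
    d->len = len;
    r->tail++; /* a real driver would now write the doorbell register */
    return 0;
}

/* Device side: consume one descriptor and copy the data it points at. */
static int demo_device_fetch(struct demo_ring *r, void *out, uint32_t out_len)
{
    if (r->head == r->tail)
        return -1; /* nothing posted */

    const struct demo_desc *d = &r->desc[r->head & (DEMO_RING_SIZE - 1)];

    assert(d->len <= out_len);
    memcpy(out, (const void *)(uintptr_t)d->addr, d->len);
    r->head++;
    return (int)d->len;
}
```

The point of the model is that the driver never moves packet bytes to the adapter itself; it only publishes an address and a length, and the other side fetches the data on its own.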

Transmission

The transmission of data starts at ena_start_xmit, which is registered as the transmission handler within ena_netdev_ops.

Note: Only the Regular mode of operation (as opposed to Low Latency Queue mode, a.k.a. LLQ) is discussed.

Submission

ena_start_xmit is given the data to transfer, encapsulated in a struct sk_buff, by the upper layers of the network stack. It first finds the queue that the socket buffer belongs to (set by the qdisc on the interface), which is then used to grab the transmission driver queue.

```c
qid = skb_get_queue_mapping(skb);
tx_ring = &adapter->tx_ring[qid];
```

ena_check_and_linearize_skb is then called to linearize the socket buffer (in case it is fragmented), followed by ena_tx_map_skb to map the socket buffer so it can be DMAed.

```c
ena_check_and_linearize_skb(tx_ring, skb);
ena_tx_map_skb(tx_ring, tx_info, skb, &push_hdr, &header_len);
```

Next is to grab a transmission buffer called tx_info from tx_ring (in the driver itself this happens before the mapping call above, which takes tx_info as an argument). This structure describes the current unit of transmission and is used in later stages of transmission (e.g. completion).

```c
next_to_use = tx_ring->next_to_use;
/* req_id helps deal with out-of-order transmission */
req_id = tx_ring->free_ids[next_to_use];
tx_info = &tx_ring->tx_buffer_info[req_id];
/* Also in ena_start_xmit */
tx_info->tx_descs = nb_hw_desc;
tx_info->last_jiffies = jiffies;
tx_info->print_once = 0;
```

To communicate with the adapter, a struct ena_com_tx_ctx named ena_tx_ctx is then built, using values inside tx_info.

```c
ena_tx_ctx.ena_bufs = tx_info->bufs;
ena_tx_ctx.push_header = push_hdr;
ena_tx_ctx.num_bufs = tx_info->num_of_bufs;
ena_tx_ctx.req_id = req_id;
ena_tx_ctx.header_len = header_len;
```

Packet descriptors of type ena_eth_io_tx_desc (this structure is part of the adapter's hardware specification and cannot be changed) are then added to the submission queue through ena_com_prepare_tx.

```c
ena_com_prepare_tx(tx_ring->ena_com_io_sq, &ena_tx_ctx, &nb_hw_desc);
```

At this point, the preparation for the socket buffer to be sent is done; it is left to the adapter to send out the data.

Before exiting, ena_start_xmit updates the internal statistics.

```c
tx_ring->tx_stats.cnt++;
tx_ring->tx_stats.bytes += skb->len;
```

Finally, next_to_use is advanced, so a new request ID will be used the next time for this tx_ring.

```c
tx_ring->next_to_use = ENA_TX_RING_IDX_NEXT(next_to_use, tx_ring->ring_size);
```

Completion

ena_start_xmit tells the ena adapter to send data, but it doesn't wait for the adapter to finish, as the network is asynchronous and high-latency by nature.

When the adapter successfully sends out the data, an interrupt is raised (the same interrupt that is raised when a packet arrives at the adapter), causing the interrupt handler ena_intr_msix_io to run and, as explained in NAPI Setup, eventually calling ena_io_poll.

The majority of ena_io_poll will be left for the next section, Reception. Right now, we are only interested in the fact that it calls ena_clean_tx_irq.

ena_clean_tx_irq calls ena_com_tx_comp_req_id_get in a loop to process completion events (for as many packets as the budget allows), unmapping the socket buffer and cleaning up the transmission buffer for reuse.
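Before reading the driver code, it may help to see the request ID recycling in isolation. The following is a small userspace model of the free_ids idea: submission takes an ID at next_to_use, completion puts whatever ID the adapter reports back at next_to_clean. The demo_* names, the ring size of 4, and the in_flight bookkeeping are assumptions added for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_RING_SIZE 4u /* power of two, kept tiny for the example */

struct demo_tx_ring {
    uint16_t free_ids[DEMO_RING_SIZE]; /* pool of request IDs */
    uint16_t next_to_use;              /* where submission takes an ID */
    uint16_t next_to_clean;            /* where completion returns an ID */
    int in_flight[DEMO_RING_SIZE];     /* 1 while the adapter owns the ID */
};

static void demo_ring_init(struct demo_tx_ring *r)
{
    for (uint16_t i = 0; i < DEMO_RING_SIZE; i++) {
        r->free_ids[i] = i;
        r->in_flight[i] = 0;
    }
    r->next_to_use = 0;
    r->next_to_clean = 0;
}

/* Advance a ring index with wrap-around. */
static uint16_t demo_idx_next(uint16_t idx)
{
    return (uint16_t)((idx + 1) & (DEMO_RING_SIZE - 1));
}

/* Submission: take the request ID stored at next_to_use. */
static uint16_t demo_submit(struct demo_tx_ring *r)
{
    uint16_t req_id = r->free_ids[r->next_to_use];

    assert(!r->in_flight[req_id]); /* would fire if the ring were full */
    r->in_flight[req_id] = 1;
    r->next_to_use = demo_idx_next(r->next_to_use);
    return req_id;
}

/* Completion: the adapter reports req_id, possibly out of order. */
static void demo_complete(struct demo_tx_ring *r, uint16_t req_id)
{
    assert(r->in_flight[req_id]);
    r->in_flight[req_id] = 0;
    r->free_ids[r->next_to_clean] = req_id;
    r->next_to_clean = demo_idx_next(r->next_to_clean);
}
```

Because completed IDs are written back in completion order, later submissions simply pick up whichever IDs became free first, and no slot is reused while the adapter still owns it.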

```c
static int ena_clean_tx_irq(struct ena_ring *tx_ring, u32 budget)
{
    int next_to_clean = tx_ring->next_to_clean;
    int tx_pkts = 0;

    while (tx_pkts < budget) {
        rc = ena_com_tx_comp_req_id_get(tx_ring->ena_com_io_cq, &req_id);
        if (rc)
            break;

        tx_info = &tx_ring->tx_buffer_info[req_id];
        skb = tx_info->skb;
        ena_unmap_tx_skb(tx_ring, tx_info);
        tx_info->skb = NULL;
        tx_info->last_jiffies = 0;

        /* The skb has been sent; release it and count the packet */
        dev_kfree_skb(skb);
        tx_pkts++;

        tx_ring->free_ids[next_to_clean] = req_id;
        next_to_clean = ENA_TX_RING_IDX_NEXT(next_to_clean, tx_ring->ring_size);
    }
    /* Omitted... */
}
```

Reception

When a packet arrives at the ENA device, an interrupt is raised, triggering the interrupt handler ena_intr_msix_io, which schedules NAPI. The actual reception occurs later in ena_clean_rx_irq, which is called inside ena_io_poll (see NAPI Setup).
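The split between a tiny interrupt handler and a budgeted poll function can be modelled in a few lines of userspace C. This is only a sketch of the general NAPI pattern as I understand it: the struct, the demo_* names, and the explicit irq_enabled flag are hypothetical and do not correspond to fields in the driver.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical per-queue state standing in for a NAPI context plus a ring. */
struct demo_napi {
    bool scheduled;   /* set by the interrupt handler */
    bool irq_enabled; /* masked while polling is in progress */
    int pending;      /* packets waiting in the completion queue */
};

/* Interrupt handler: do no packet work, only request a poll. */
static void demo_irq(struct demo_napi *n)
{
    n->irq_enabled = false;
    n->scheduled = true;
}

/* Poll callback: handle at most `budget` packets, return how many were handled. */
static int demo_poll(struct demo_napi *n, int budget)
{
    int done = 0;

    assert(budget > 0);
    while (done < budget && n->pending > 0) {
        n->pending--; /* stand-in for processing one packet */
        done++;
    }
    if (done < budget) {
        /* Queue drained: stop polling and wait for the next interrupt. */
        n->scheduled = false;
        n->irq_enabled = true;
    }
    return done;
}
```

With 100 packets pending and a budget of 64, the first poll handles 64 and stays scheduled; the second handles the remaining 36 and re-enables the interrupt.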

ena_clean_rx_irq runs a loop to process as many packets as allowed by the NAPI budget. For each packet, it builds the received packet context/metadata ena_rx_ctx with ena_com_rx_pkt.

```c
static int ena_clean_rx_irq(struct ena_ring *rx_ring, struct napi_struct *napi, u32 budget)
{
    do {
        /* Receive single packet */
        ena_rx_ctx.ena_bufs = rx_ring->ena_bufs;
        ena_rx_ctx.max_bufs = rx_ring->sgl_size;
        ena_rx_ctx.descs = 0;
        ena_com_rx_pkt(rx_ring->ena_com_io_cq,
                       rx_ring->ena_com_io_sq,
                       &ena_rx_ctx);

        /* Omitted... */

        budget--;
    } while (likely(budget));
    /* Omitted... */
}
```

ena_com_rx_pkt asks for the number of descriptors used by a new, unhandled packet (through ena_com_cdesc_rx_pkt_get) and retrieves each packet descriptor, of type ena_eth_io_rx_cdesc_base (part of the adapter's hardware specification), through ena_com_rx_cdesc_idx_to_ptr. Finally, it stores the information inside ena_rx_ctx:

```c
int ena_com_rx_pkt(struct ena_com_io_cq *io_cq,
                   struct ena_com_io_sq *io_sq,
                   struct ena_com_rx_ctx *ena_rx_ctx)
{
    /* Start filling at the first buffer slot of the context */
    struct ena_com_rx_buf_info *ena_buf = &ena_rx_ctx->ena_bufs[0];

    nb_hw_desc = ena_com_cdesc_rx_pkt_get(io_cq, &cdesc_idx);

    for (i = 0; i < nb_hw_desc; i++) {
        cdesc = ena_com_rx_cdesc_idx_to_ptr(io_cq, cdesc_idx + i);

        ena_buf->len = cdesc->length;
        ena_buf->req_id = cdesc->req_id;
        ena_buf++;
    }

    /* Update SQ head ptr */
    io_sq->next_to_comp += nb_hw_desc;

    /* Get rx flags from the last pkt */
    ena_com_rx_set_flags(ena_rx_ctx, cdesc);

    ena_rx_ctx->descs = nb_hw_desc;
    return 0;
}
```

With ena_rx_ctx ready, the next step is to get the socket buffer with ena_rx_skb.

```c
/* Build ena_rx_ctx... */

/* allocate skb and fill it */
skb = ena_rx_skb(rx_ring, rx_ring->ena_bufs, ena_rx_ctx.descs, &next_to_clean);

ena_rx_checksum(rx_ring, &ena_rx_ctx, skb);
ena_set_rx_hash(rx_ring, &ena_rx_ctx, skb);
skb_record_rx_queue(skb, rx_ring->qid);

if (rx_ring->ena_bufs[0].len <= rx_ring->rx_copybreak) {
    total_len += rx_ring->ena_bufs[0].len;
    rx_copybreak_pkt++;
    napi_gro_receive(napi, skb);
} else {
    total_len += skb->len;
    napi_gro_frags(napi);
}
```

The socket buffer is then passed to the network stack with either napi_gro_receive (for small packets that are not fragmented) or napi_gro_frags (for large, fragmented packets), using GRO to speed up data processing.
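The rx_copybreak threshold in the branch above follows a pattern common to network drivers: small packets are copied into a fresh buffer so the receive buffer can be handed straight back to the adapter, while large packets are passed along without copying. The userspace sketch below shows only that decision. The threshold value, the result struct, and the demo_* names are assumptions, and the real driver builds socket buffers rather than returning raw pointers.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define DEMO_COPYBREAK 256u /* hypothetical threshold in bytes */

struct demo_rx_result {
    void *data;      /* buffer handed to the stack */
    int copied;      /* 1 if data is a private copy */
    int page_reused; /* 1 if the receive buffer stays with the driver */
};

/* Decide how to hand a received buffer of `len` bytes to the stack. */
static struct demo_rx_result demo_rx(void *rx_buf, size_t len)
{
    struct demo_rx_result res = { NULL, 0, 0 };

    assert(rx_buf != NULL);
    if (len <= DEMO_COPYBREAK) {
        /* Small packet: copy it out so the receive buffer can be reposted. */
        res.data = malloc(len ? len : 1);
        if (!res.data)
            return res; /* allocation failed: caller sees data == NULL */
        memcpy(res.data, rx_buf, len);
        res.copied = 1;
        res.page_reused = 1;
    } else {
        /* Large packet: pass the receive buffer itself, no copy. */
        res.data = rx_buf;
    }
    return res;
}
```

The trade-off is a short memcpy for small packets against the cost of allocating and mapping a new receive buffer for every packet. The caller owns (and must free) the copy when copied is set.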

Similar to Transmission, statistics are updated to reflect the latest status.

```c
rx_ring->per_napi_packets += work_done;
rx_ring->rx_stats.bytes += total_len;
rx_ring->rx_stats.cnt += work_done;
rx_ring->rx_stats.rx_copybreak_pkt += rx_copybreak_pkt;
```

Timeout

Because a packet that times out (perhaps the adapter lost it?) never produces a completion, we need another means of dealing with timeouts. This is where ena_timer_service comes into play: it calls check_for_missing_completions to check all the transmission and reception queues.

```c
for (i = 0; i < adapter->num_io_queues; i++) {
    tx_ring = &adapter->tx_ring[i];
    rx_ring = &adapter->rx_ring[i];

    if (check_missing_comp_in_tx_queue(adapter, tx_ring))
        return;

    if (check_for_rx_interrupt_queue(adapter, rx_ring))
        return;
}
```

If too many packets timed out for transmission, or too many interrupt issues occurred for reception, the adapter is reset.
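The transmission side of this check can be pictured with the last_jiffies field seen earlier: it is stamped on submission and cleared to 0 on completion, so any buffer with an old, non-zero stamp is a missing completion. Below is a minimal userspace sketch of that idea. The timeout, the threshold, and the demo_* names are hypothetical values chosen for illustration, not the driver's constants.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define DEMO_TX_TIMEOUT 5000ul /* hypothetical timeout, in ticks */
#define DEMO_MAX_MISSING 4u    /* hypothetical reset threshold */

struct demo_tx_buffer {
    unsigned long last_jiffies; /* 0 means "not in flight" */
};

/*
 * Count in-flight buffers older than the timeout and report whether
 * the adapter should be reset.
 */
static bool demo_needs_reset(const struct demo_tx_buffer *bufs, size_t n,
                             unsigned long now)
{
    unsigned int missing = 0;

    assert(bufs != NULL);
    for (size_t i = 0; i < n; i++) {
        if (bufs[i].last_jiffies == 0)
            continue; /* completed or never used */
        if (now - bufs[i].last_jiffies > DEMO_TX_TIMEOUT)
            missing++;
    }
    return missing > DEMO_MAX_MISSING;
}
```

A handful of late completions is tolerated; only when the count exceeds the threshold does the sketch ask for a reset, mirroring the "too many packets timed out" condition described above.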

Discussion and Conclusion

As can be seen from the code, the use of multiple queues greatly simplifies locking, since each Transmission/Reception queue pair is only manipulated by a single CPU (and is not touched in the interrupt handler).

Many details about the ENA driver have been left out, namely the Asynchronous Event Notification Queue (AENQ), Admin Queue (AQ), Admin Completion Queue (ACQ), ethtool operations, and much more. Hopefully, future posts can look into those as well.

Appendix I. Data Structure

This section explains the major data structures used inside the ENA driver and serves as a supplement to the main article.

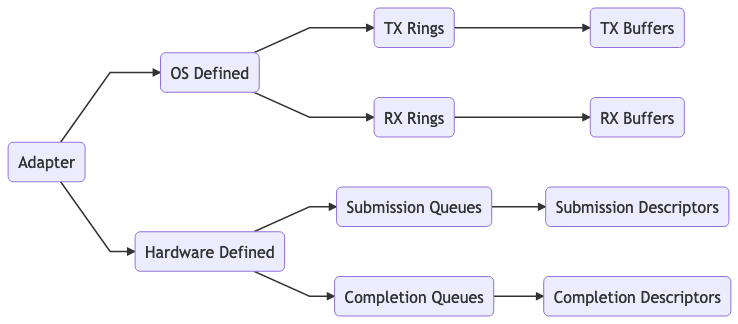

The above diagram is the high-level hierarchical view of the relationship between the data structures.

Adapter

The adapter is represented by ena_adapter, which holds most of the data associated with a device and lives at the end of net_device (pointed to by priv). PCI-specific data is stored elsewhere, in ena_com_dev (probably to keep the private data small).

```c
struct ena_adapter {
    struct ena_com_dev *ena_dev;

    /* TX */
    struct ena_ring tx_ring[ENA_MAX_NUM_IO_QUEUES];
    /* RX */
    struct ena_ring rx_ring[ENA_MAX_NUM_IO_QUEUES];
};

struct ena_com_dev {
    struct ena_com_admin_queue admin_queue;
    struct ena_com_aenq aenq;
    struct ena_com_io_cq io_cq_queues[ENA_TOTAL_NUM_QUEUES];
    struct ena_com_io_sq io_sq_queues[ENA_TOTAL_NUM_QUEUES];
    u8 __iomem *reg_bar;
    void __iomem *mem_bar;
    void *dmadev;
};
```

Driver Queue

ena_ring represents a driver queue (sometimes simply called a ring buffer). The actual location of the ring buffer can be found by looking into either tx_buffer_info or rx_buffer_info, depending on whether the queue is used for transmission or reception.

```c
struct ena_ring {
    /* Holds the empty requests for TX/RX
     * out of order completions
     */
    u16 *free_ids;

    /* Number of tx/rx_buffer_info's entries.
     * This uses the calculated value of ena_calc_io_queue_size,
     * defaulting to 1024 (ENA_DEFAULT_RING_SIZE).
     */
    int ring_size;
    union {
        struct ena_tx_buffer *tx_buffer_info;
        struct ena_rx_buffer *rx_buffer_info;
    };

    /* cache ptr to avoid using the adapter */
    struct ena_com_io_cq *ena_com_io_cq;
    struct ena_com_io_sq *ena_com_io_sq;

    /* cpu for TPH */
    int cpu;

    struct ena_com_rx_buf_info ena_bufs[ENA_PKT_MAX_BUFS];
};
```

Buffer

These are the data structures inside struct ena_ring that represent the packet buffers.

Transmission

ena_tx_buffer is a high-level representation of a single transmission buffer, normally stored in a variable named tx_info. It is associated with a single socket buffer and one or more descriptors.

```c
struct ena_tx_buffer {
    struct sk_buff *skb;
    /* num of ena desc for this specific skb
     * (includes data desc and metadata desc)
     */
    u32 tx_descs;
    /* num of buffers used by this skb */
    u32 num_of_bufs;

    /* Save the last jiffies to detect missing tx packets
     */
    unsigned long last_jiffies;
    struct ena_com_buf bufs[ENA_PKT_MAX_BUFS];
};
```

Reception

ena_rx_buffer is a high-level representation of a single reception buffer, normally stored in a variable named rx_info. It is also associated with a single socket buffer.

```c
struct ena_rx_buffer {
    struct sk_buff *skb;
    struct page *page;
    u32 page_offset;
    struct ena_com_buf ena_buf;
};
```

DMA Queue

The ena_com_io_sq represents a submission queue and ena_com_io_cq represents a completion queue.

The packet descriptors themselves are stored elsewhere, in memory allocated with dma_alloc_coherent and pointed to by either desc_addr (for a submission queue) or cdesc_addr (for a completion queue).

```c
/* ena_com_io_cq's structure is roughly the same */
struct ena_com_io_sq {
    /* Points to the actual location of the
     * descriptor queue/ring buffer.
     */
    struct ena_com_io_desc_addr desc_addr;

    /* For transmission or reception? */
    enum queue_direction direction;

    u32 msix_vector;

    /* How many descriptors this queue can hold.
     * Should be the same as the ring_size of
     * the corresponding ena_ring.
     */
    u16 q_depth;
    u16 qid;

    u16 idx;
    /* head for ena_com_io_cq */
    u16 tail;
    u16 next_to_comp;
    /* Size of each descriptor */
    u8 desc_entry_size;
};
```

The submission queues hold socket buffers submitted by the driver. For the transmission submission queues, the socket buffers hold data that awaits transmission. For the reception submission queues, the socket buffers wait for the adapter to write data into them.

Completion queues, on the other hand, are used to signal which of the socket buffers referenced inside the submission queue have been processed. For the transmission completion queues, this means the data inside the socket buffer has been sent. For the reception completion queues, this means the socket buffers now hold packet data.

Descriptors

These are the actual descriptors read or written directly by the adapter. They describe the memory area that the adapter should read or write. All of them, except the completion descriptor ena_eth_io_tx_cdesc, are 16 bytes in size.

- ena_eth_io_tx_desc
- ena_eth_io_tx_meta_desc
- ena_eth_io_tx_cdesc
- ena_eth_io_rx_desc
- ena_eth_io_rx_cdesc_base